End of an Era

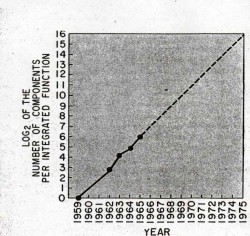

I was going to title this post "The End of Moore's Law," but that would not be quite right. What is happening is that increases in processor speeds have slowed or even stopped. Without faster processors, it is hard to take advantage of increases in memory capacity. Here is the reference to the National Research Council's preliminary report. Initially I all but ignored the news of this report, but then I happened to hear a talk by a researcher at Nvidia, who said the same thing. Nvidia's answer is to make processors "wider," not "faster" (i.e., more parallel). Nvidia, a maker of highly parallel graphics processors, has a stake in moving computing in that direction, but still.

I'll leave this debate to others, but if Moore's Law is coming to an end, what would that mean for the history of computing?